Getting Started

Overview

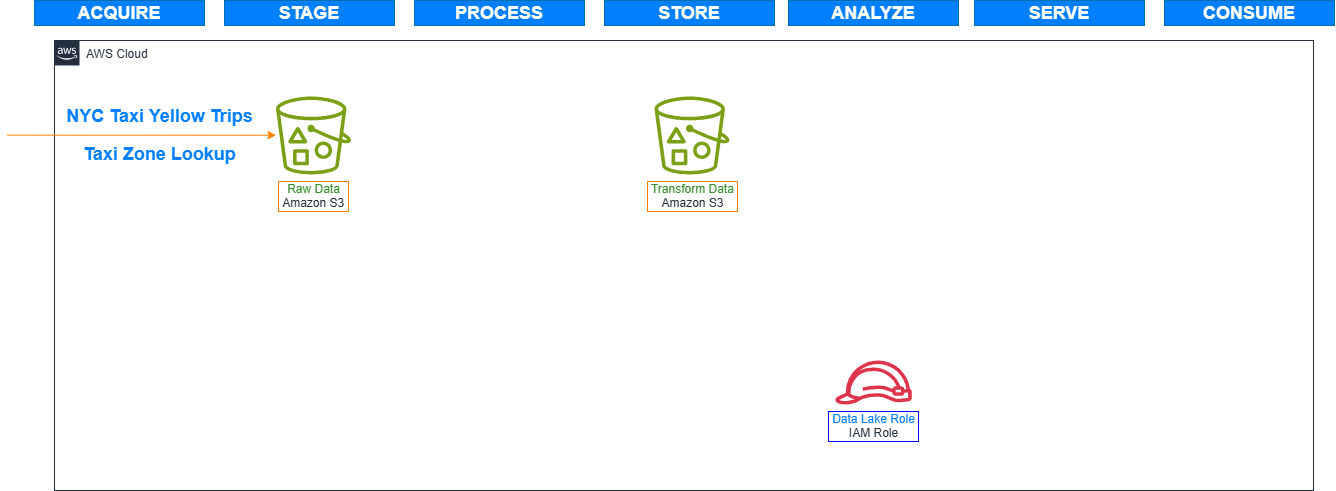

In preparation for the workshop, we have created Amazon S3 buckets, copied sample data files to Amazon S3, and created an IAM service role.

What is a Data Lake?

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. You can store your data as-is, without having to first structure the data, and run different types of analytics—from dashboards and visualizations to big data processing, real-time analytics, and machine learning to guide better decisions.

Introducing Amazon S3

Amazon Simple Storage Service (S3) is the largest and most performant object storage service for structured and unstructured data and the storage service of choice to build a data lake. With Amazon S3, you can cost-effectively build and scale a data lake of any size in a secure environment where data is protected by 99.999999999% (11 9s) of durability.

With data lakes built on Amazon S3, you can use native AWS services to run big data analytics, artificial intelligence (AI) and Machine Learning (ML) to gain insights from your unstructured data sets. Data can be collected from multiple sources and moved into the data lake in its original format. It can be accessed or processed with your choice of purpose-built AWS analytics tools and frameworks. Making it easy and quick to run analytics without the need to move your data to a separate analytics system.

Deploy a CloudFormation Template

Some of the assets provided in this workshop were designed with Intended Vulnerabilities. Please USE WITH CAUTION as these assets are for Training Purposes only. If you run this workshop using your own AWS Account, just be aware that it will incur costs until you clean up the deployed AWS resources.

We will first set up required resources for this lab. We will be creating S3 buckets to store the files and appropriate IAM roles for the services that we will be using. These resources are been defined in a CloudFormation template.

- Go to CloudFormation in AWS Console.

- In the right navigation menu, click Create stack, With new resources (standard).

- Download this file: CFN Template

- Select Upload a template file, and upload the file you downloaded.

- Click Next.

- For stack name, specify

sdlj-workshop. - Click Next until Review and create page.

- Check I Acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Click Submit.

- Wait for resources to be provisioned.

In the following sections, we will delve into Discovering and Cataloging your Data